Lily Tomlin lives under the Atlantic Ocean

I love improvisational loop sessions with my Boss RC-202. One of my favorite ways to get song and lyric ideas is by using Trivial Pursuit cards as random prompts. This one gets a little weird.


Experimental Short Films

I’ve wanted to make some fun, experimental short films for a while now. Nothing with a literal narrative story, just abstract motion pieces. Visual poetry. I’ve wanted to play around with this idea for a long time, but something else always comes along that seems more important.

So in the past few weeks I decided to simply dive in. But of course, in my scattered way, I ended up making little bits for a bunch of short films instead of committing to getting one going. Normally I’d be mad at myself for this, but now I’m realizing that it’s just who I am as an artist. A scatter-brained, multi-passionate tiger can’t change his stripes.

On my phone I have this app called FotoDa. It’s a digital photo collage app that pulls images from your phone’s photo album and mashes them together. Any time I have downtime, I end up generating a handful of fun collages.

I started going through my backlog of collages to see how I could bring them to life with motion. They seemed abstract enough that I wouldn’t get caught up in story.

I have a bunch of other apps on my phone that I can run images through. Some distort them; some are more like filters. So that was my next phase: send the photo collages through some of these other weird apps.

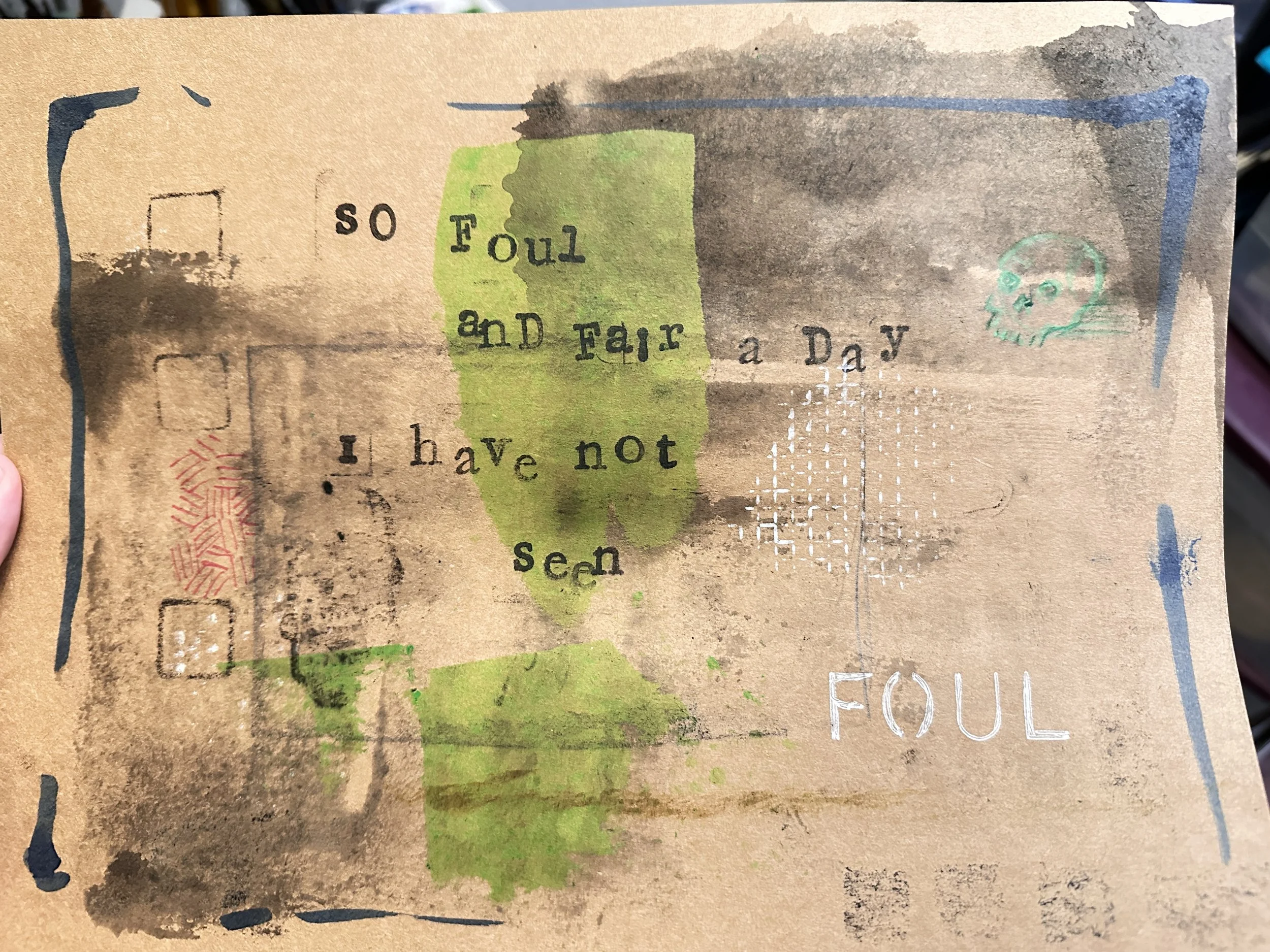

Then I wanted to make a piece of art with real, physical media. Something fun and mixed-media: stencils, torn paper, stamps. Whatever I had.

So far I really like this approach. These feel like little bits of raw material that will eventually turn into a fun little abstract film. But the next step is to try to find some concrete imagery. A character, an environment. Something the viewer can connect with.

This piece already feels like it belongs in a desert. So I guess the next step is to figure out what kind of short abstract story I can tell in that location.

Wish me luck!

Making Mr B Stuff

For over a decade now, I've been drawing a little character called Mr. B. Sometimes a character just takes on a life of its own. What started as a simple song illustration has grown into a multi-faceted project. I love him; he's one of my favorite little characters to draw.

A while ago I made a bunch of watercolors of Mr. B, and once I had enough of them, I decided to turn them into a postcard set. That set did really well in my shop, so I made a second set. Both sets included pens, buttons, and stickers, adding to the Mr. B collection.

I had made enough Mr. B merchandise that I thought it was worth putting them all in a box and having it come with an original canvas painting, and all this fun stuff. This was the limited edition box set.

Then recently, I realized that a lot of the watercolors I painted and postcards I made would, with some small revisions, make great iPhone wallpapers. I just had to change the cropping and extend the tops and bottoms, which usually meant painting more sky up top and more flowers and grass down below. Then there was the challenge of coming up with a variety of layouts where Mr. B wouldn't be blocked by any major on-screen text.

Working on this made me want to make a third Mr. B postcard set which would give me 30 total postcards. Which is a lot, but it would be a nice mega set to have at some point.

I made a Mr. B game a few years ago. It's a really cute little music game where you fly around as Mr. B and play drums, and make music on a little bee sequencer.

I put these wallpapers on my Gumroad site, which is kind of a digital marketplace for independent sellers like me. And now of course, I really wanna make a T-shirt for Mr. B. And then it's time to just go through all of my projects, songs and characters that I have and see what I can make next.

Too many prints

I’ve run out of space in my print drawer! So I’ve decided to run a print sale in my shop to try to make room for new prints. It’s the biggest print sale I’ve ever run: a giant 50% off all my prints.

I’m trying something new with the sale’s rollout as well. I’m starting with an initial batch of prints on sale, and then every day I’m adding two more prints to the sale. I’ll continue this until I run out of prints to add or the sale ends at the end of the month.

But there are a few challenges that I’m already encountering with this sale. A lot of my prints need to be trimmed down. And since I’m trimming them down, I decided to round all the corners too!

I actually love the look of rounded corners on prints. But they have a practical benefit as well. Corners are the first place prints get damaged, either during shipping or simply from handling. So rounded corners help them hold up much better.

But I’ve also realized that I have a bunch of prints that I never really listed in the shop. So now I have to make new listings for them all! Which honestly, is something I should have done ages ago.

A while ago I made the decision to stop shipping large prints. Which was a shame, because I absolutely love larger prints. But they were becoming harder and harder to package, and the shipping costs were pretty bad. That means I’ve got a fair number of large prints still sitting around.

So I have to decide if I can re-crop them into manageable sizes, or if I should try to figure out once again how to ship them at full size.

All in all, I’m finding this to be an interesting task. I’m excited to see how the print sale does. I’d love to get my artwork in more people’s homes, and if price was a barrier for some people, hopefully this can ease that.

Illustrating my first Children's Book

My friend Bret wrote a really cool children's book a while ago, and he asked me if I would illustrate it. And I was flattered. Of course. And as soon as I read the book, I was like, immediately, “yes, yes, I will do this”. It's so good.

The first challenge was to design the two main characters. The lead is a girl named Millicent Clarke, who has some issues with the way that her feet look.

So the trick here was to make sure that the character didn't look too young and cutesy, but also that I didn't get too weird and crazy with the design either.

And then there was Doctor Parts. Millicent goes to see Doctor Parts to see if he can help her with her issues, and he ends up making her synthetic body parts. I wanted Doctor Parts to look friendly, but also to be sort of a caricature. When Millicent sees the billboard of him, I wanted that to be how he looked in real life.

Larger than life. A little bit like Guy Smiley.

Then it was time to figure out what the synthetic limbs looked like. In my first pass, I think I leaned too much into, like, robot parts. Bret didn't really want to go in that direction, but he liked the sort of Westworld-looking, 3D-printed resin vibe that I did. So we ended up going with that.

Then I experimented with a variety of facial expressions and got to work on what the backgrounds might look like. I ended up storyboarding the whole book in tiny little thumbnail drawings, just to get a sense of how the story would flow, and also to see roughly how many pages the final book would end up being.

And there's something really cool about being able to see the whole story sort of laid out like this.

Backgrounds are always a bit tricky for me. I spend most of my time drawing characters and people and figures. I don't really spend a lot of time drawing environments, so this was an interesting challenge and a bit of a learning experience for me to spend this much time getting this many different backgrounds and environments drawn for this book.

Again, I wanted them to have a storybook feel, like a classic storybook feel, but I also wanted them to have some texture and some interest.

But they needed to not compete with the main characters, so they had to be pushed back a little to let the characters stand out.

I'm such a sucker for seeing my own work in print. That feeling never ceases to amaze me: making something on my own, in my room, in my studio, and then getting 20 copies of a book in the mail, filled with artwork that I drew. It's just a really cool feeling.

But there's also a bit of sadness when you finish a project, when you're like, “oh, I guess it's done.” And the only way for me to really deal with that is to start a new project.

Filling a sketchbook with creepy dolls

I've always been fascinated by antique dolls and figures and old sculptures and all those knickknacks that people used to collect. Fascinated and terrified a bit, I guess. So years ago, I got a couple books out of the library on antique dolls, and I started a sketchbook dedicated solely to that theme.

I sketched a bunch of different dolls and tried to stay faithful to the reference photos, but added my own twist or flair wherever I felt it was needed.

And then I just kind of forgot about that book.

Not that long ago, I showed it to Sam and she fell in love with a bunch of drawings and wanted to make real versions of them. Sew them, or repurpose old dolls.

There's something cool about how decayed and decrepit they looked.

Then recently we were at a thrift store, and she actually found one of the books I had referenced, the exact same book. So we matched up all the poses and found the exact photos that I drew from.

So that got me interested in picking that sketchbook up again and seeing if I could finish it. I had only drawn in half of the book, so I had the whole other half left to fill.

When I originally started the book, for some reason I only drew on the right-hand pages, probably because I didn't want to smudge anything. Then, two years later, I went back and redrew those drawings on the facing left-hand pages. That was fun for me: getting the initial drawing out however I could, then redrawing it with cleaner lines or simpler strokes, trying to simplify things and make some decisions about them.

This time I decided to use a few different types of media: a little bit of marker, a little bit of ink, a little bit of pastel. Not too much, because the sketchbook isn't really well suited for that, but just enough to give these new drawings a different look from the old stuff.

I have trouble finishing sketchbooks, and I used to think that was a problem, but now I think I'm okay with it because it allows me these pleasant little surprises like this, where I can go back to these old sketchbooks and just sort of continue the theme.

The Zoom PS-02

I have a metric ton of music gadgets that I've collected over the years. I finally built a shelf system to display them all properly, and now they sit above my computer desk. The funny thing is, I forget they’re even there! Which is ironic because the main reason I bought them was to get me away from my computer.

When I'm working on music, I usually open up Logic or Ableton and spend most of my time playing around with plugins, using a MIDI keyboard to play synthesizers and make drum beats. It's all inside the computer, which means I'm sitting at my desk for hours on end.

I got all these little gadgets so I could step away from the screen for a bit. Since they’re battery-operated, I can take them on walks, to a cafe, or even to Panera. I can just sit, have lunch, and make some music with headphones on.

I also like using them because they're very tactile. There are buttons and knobs and all these things I can move, manipulate, and play with, things that aren't a computer mouse! When you're making music on a computer, you're usually using your mouse to turn these virtual knobs and dials. It just feels cooler to do it for real.

My Go-To Gadget: The Zoom PS-02

One of my go-to gadgets is this old Zoom PS-02 (the PS stands for Pocket Studio). It's basically a little four-track studio where you can sketch out song ideas quickly. It has a built-in, very simplified drum and bass machine, and then you have three tracks of audio, which I can use for fun little harmony parts.

My favorite thing to do is use the delay effect as a short phrase looper. I can make a sound and have it repeat, then add to it and build up a fun little rhythm or drum part. Then I can add little harmony lines, and things build up pretty quickly.

It's a pretty noisy device, but one of the things I like about the delay effect is that it actually trails off after a certain amount of time. There's a decay on it. So if I start with some kind of beat, eventually, after like a minute, that beat will kind of decay into the background, and I get to reinforce it with a new beat. It's all very silly and experimental and fun.
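For anyone curious why the repeats fade out: the PS-02's internal delay algorithm isn't described here, so this is only a minimal sketch assuming a generic feedback delay, where each echo is the previous echo multiplied by a feedback amount below 1. The function name `feedback_delay` and its parameters are hypothetical, chosen for illustration, not anything from the device.

```python
def feedback_delay(dry, delay_samples, feedback, length):
    """Generic feedback delay (an assumed model, not the PS-02's actual algorithm).

    Computes y[n] = x[n] + feedback * y[n - delay_samples], so each repeat
    is the previous one scaled by `feedback`. With 0 < feedback < 1 the
    repeats shrink geometrically and gradually fade into the background.
    """
    if delay_samples <= 0:
        raise ValueError("delay_samples must be positive")
    out = [0.0] * length
    for n in range(length):
        # Dry input: whatever is being played right now (silence once it ends).
        x = dry[n] if n < len(dry) else 0.0
        # Echo: the output from one delay period ago, fed back into the line.
        echo = out[n - delay_samples] if n >= delay_samples else 0.0
        out[n] = x + feedback * echo
    return out


# A single drum hit, echoed every 4 samples at 50% feedback.
print(feedback_delay([1.0], delay_samples=4, feedback=0.5, length=13))
# [1.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.25, 0.0, 0.0, 0.0, 0.125]

# Playing a new hit on top of the fading loop "reinforces" the beat.
hits = [1.0] + [0.0] * 7 + [1.0]
print(feedback_delay(hits, delay_samples=4, feedback=0.5, length=13))
# [1.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 1.25, 0.0, 0.0, 0.0, 0.625]
```

In this toy model, a feedback of 0.5 makes each repeat about 6 dB quieter than the last. How long a beat actually takes to fade on a real device depends on its delay time and feedback settings, which this sketch doesn't try to reproduce.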

I do it mostly as an exercise, a way of warming up or coming up with ideas. But the machine is so noisy that I'm not often able to use anything I make with it in an actual song. I can clean it up a little bit with some noise reduction software, but it's still not great.

Finding Balance

There are times when I need to sit down at the computer and really work on a song properly. I need the precision that editing with a mouse gives me, being able to move things around that way. And I need the control and robustness of a full digital audio workstation.

But sometimes I just want to play with sounds and make some noise. And that's why I love having access to all these little gadgets.

The Zoom PS-02 is a perfect example of a gadget that lets me embrace the limitations and make something from nothing. I have to be creative and resourceful, and the results are always surprising.

It's a good reminder that making music doesn't have to be complicated or expensive. Sometimes the simplest tools can be the most inspiring.

Revisiting a 20-year-old art project

Years ago, I had an art project called Introspective. I ended up having a whole art show around it, and eventually I made it into an art book. But recently I've been revisiting it, mostly trying to find ways to add motion to the old pieces. I’m planning to turn the whole thing into a sort of experimental short film. But on the way to that, I'm hoping to get a bunch of fun new pieces.

Back when I was working on this art project, I scanned all my watercolor and mixed media pieces. Some were too big to fit, so I had to stitch them together from multiple scans.

It's fun to see the whole project laid out like this. Like a bird's eye view.

I'm taking these old pieces, and trying to find ways to animate them. It's been a fun challenge, and hopefully it all ends up stitched together into something cohesive.

I bought a bunch of fun stamps and letterforms to add to my recent mixed media pieces. The trouble is organizing them!

Drawing bees. Always drawing bees.

I love bringing this stuff into my iPad to animate it. It feels like a good way to bring new life to these old pieces.

Getting out of the studio

Studio time is important for creative work, but it's easy to get caught up in it and forget to take breaks. For a while, I had built a habit of taking walks, particularly after lunch, to clear the mind and get some exercise. Fresh air and a change of scenery can be good for everyone, and even a small amount of exercise can be beneficial. However, when winter arrived in Colorado, the cold weather made it difficult to maintain the routine of daily walks, and the habit was broken.

Once the weather warmed up again, it became a struggle to remember to go for walks and to recall how beneficial they had been.

Being in the studio can be very absorbing, since it's where all my stuff is! All my art supplies and music equipment are here. It's easy to get caught up in projects and find reasons not to leave the house. I have a strong tendency to be a bit of a hermit, and spending too much time in the studio can reinforce that inclination. As I’ve grown older, I’ve recognized the importance of making a conscious effort to get out of the studio and go for walks. It helps break up the monotony of studio life and provides a sense of renewal. Walks offer a different kind of distraction from the work in the studio, allowing the mind to wander and be refreshed. Oftentimes, I’m able to find inspiration in the sounds of nature and the environment, which contrast with the typical distractions of studio work.

I can focus on the sounds of the wind rustling through the trees, the steady hum of traffic, and the rhythm of my own footsteps. These create a kind of soundscape that sometimes sparks new ideas. And of course it’s a nice break from the intensity of studio work.

Of course it’s important to have dedicated studio time for completing projects, whether I’m working on art or music, but sometimes the best thing for my creative process is to actually get out of the studio.

Making hand-made books: Tab Binding


I've been really into making handmade books lately. Small ones. More as art pieces than anything else. But I'm still a bit intimidated by bookbinding and stitching. So when I found a YouTube video demonstrating a no-stitch binding method called tab binding, I was intrigued. I was eager to try a binding method that seemed more approachable for a beginner like me.

Tab binding is a unique technique where you bind each sheet, or signature, together with small tabs. The result is a book that feels special, almost like an artifact or a discovered treasure. The exposed tabs add a visual element that elevates the book beyond a simple collection of pages.

Here's what I love about tab binding:

No stitching required: This is a major plus for anyone who finds sewing intimidating.

Unique look: The tabs create a distinctive aesthetic that sets tab-bound books apart.

Artifact feel: The exposed binding gives the book a handmade, almost ancient quality.

Now that I have a few of these little books constructed, I'm excited to start filling them. My initial thought is to use them for tiny drawings and diagrams. I love the idea of creating miniature worlds within these compact pages. But I'm also considering using my itty bitty thermal printer to create tiny books of quotes and poems.

Working on these handmade books has brought about a welcome shift in my creative process. Lately, I've been too focused on making art with the intention of selling it. And that puts you in a different headspace – one that can sometimes stifle creativity. But with these little books, I haven't been thinking about selling them at all. It's been nice to simply make something for the fun of the process. It's a good reminder of why I make art in the first place. The freedom to create without the pressure of commercial viability has allowed me to reconnect with the pure joy of making.

Starting a new song from a random prompt

As a songwriter, I’m always looking for ways to shake up my creative process. I know that if I rely on the same familiar themes and musical ideas, my songs will start to sound predictable. One of my favorite songwriting exercises is to start a song with a random word or phrase.

And my favorite source for random prompts is a website called Watch Out for Snakes. It’s a great tool for generating all sorts of random content, from words and phrases to entire lists. While I don't always love what it gives me, it's a good way to get my brain working in new directions. This time, I decided to generate a random sentence.

I didn't super like what I got, but I liked the word “mathematician”. And so as I was thinking about things, I started thinking about someone who's trying to math their way through love. I imagined someone trying to apply logic and reason to something as messy and unpredictable as love, and I thought there was something interesting there.

So I took that random phrase and entered it into a new sample pack generator that Output has.

Usually you would type things like “trance beat at 100 bpm” or “happy piano solo in the key of D”, you know, something like that, something a little more musically descriptive. But I thought it would be fun to just try this nonsense phrase and see what sample packs it would generate for me.

Once I listened through a few of the ideas, I really dug it. Sort of this melancholy piano part that completely fit the concept that I was going for. With the piano sample as my foundation, I started building the song in Logic Pro. I chopped up the sample, rearranged some of the pieces, and added some of my own piano playing to reinforce the bassline and modify the phrasing a bit.

At this point, I started hearing a bit of a melody in my head, this little idea, and I thought, what could that be? First I wrote “sometimes it doesn't add up,” thinking I would try to use as many math puns as I could, but I ended up changing it to “It's true, it doesn't add up.”

I wanted to add some other instrumentation to the track, so I explored the other samples in the pack. I brought in some flute and a little bit of strings. The strings ended up being a bit too overpowering, so I turned them down in the mix. I also found a really cool acoustic guitar part in the pack that I liked, but it didn't quite fit with the first section of the song. I decided to add it in as a separate section, and this decision ended up changing the entire direction of the song.

The addition of the acoustic guitar created a natural division in the song. The first part, with the piano and the more subdued melody, felt like an intro. The second section, with the acoustic guitar, took on a faster, more rhythmic feel.

I love working this way because it forces me to think differently about songwriting. I wouldn't have come up with this particular song, with its unique concept, instrumentation, and structure, if I had relied solely on my usual methods.

This experience has reinforced the importance of experimentation and play in the creative process. Sometimes, the best way to overcome a creative block is to simply try something new and see where it leads you. Even if you don’t end up with a finished product, the process of exploration can be incredibly rewarding. It can teach you new things about your craft and open up new creative pathways that you might not have discovered otherwise.

Spoopy Playlist!

I put together a tiny little spooky (and spoopy!) playlist to lift your Halloween spirits!

Covering Blank Space

A few years ago, I came across Ryan Adams's cover of Taylor Swift's "Blank Space" and loved it immediately. I loved the unique, raw, and earthy sound Adams brought to the song. He'd transformed a pop anthem into a soulful folk tune, a transformation he applied to Swift's whole 1989 album.

So of course I wanted to try my own version, keeping that mellow acoustic vibe. But my first attempts weren't good. I couldn't get a sound I was happy with. My guitar playing was mediocre and my recording setup wasn't great, so I was struggling with both the sound of my guitar and the recording itself. I was getting frustrated.

So I gave up.

Fast forward to a few months ago, when I found something that made me want to try again.

I was playing around in Logic, and I rediscovered a Native Instruments plugin called Strummed Acoustic. It felt like a breakthrough. The plugin could enhance the natural acoustic sounds of my guitar, adding a depth and texture I'd been missing. Pairing it with my own guitar recording finally started to sound cool.

So I was back on track!

This whole experience has shown me how important it is to keep going. Even with the early setbacks and frustrations, I still wanted to create something beautiful and meaningful. That desire kept me inspired.

Covering "Blank Space" was a process of learning, adapting, and finding new possibilities. Re-imagining a song is about more than just replicating the original; it's about finding a way to make it your own, infusing it with your style, and adding your own fingerprint.

Ryan Adams did that with his version, and I hope I was able to do it with mine.

Calvin Klein lyric video

Well, I’ve been busy trying to get all the lyric videos done before the full album comes out next month, and it’s been a whirlwind!

So far, I’ve gotten 1.21 Gigawatts done, as well as Hoverboard. And as of yesterday, I’ve got Calvin Klein finished as well!

All three videos are on the No More Kings official YouTube channel, here: https://www.youtube.com/@nomorekings

Making clay magnet faces

I bought a 3D printer a while ago and started using it to make some fun things.

And then I had my first failed print, and I kind of got gun-shy. I never really got back to using it.

But during that time, I had this idea of printing these zombie face magnets, and I thought that they'd be really cool.

Later, after the printer failed, I went back to these magnets, looked at them, and thought, “You know, it's cool that they're 3D printed, but they don't have to be. These could easily be sculpted.”

My little zombie friend watches on. He’s very encouraging.

I have all these interesting colors of clay, and I have this cool metal cutter that cuts out ovals, which I thought might make a good starting size. Then I could just see how much variety I could get from these faces. I'd experiment with some being kind of horizontal and some being long, vertical faces.

And then I can just play with the features and see how much variety I can get there. Give them really simple mouths. But I could play with this other stuff like bags under their eyes or like mustaches or eyebrows. I could give them really crazy noses.

So it started out as sort of this exercise in minimalist design. Like, how much personality can I get out of these guys with the simplest shapes?

Sometimes when I'm in the mood to make things, I get overwhelmed by all the choices and the possibilities. And so to combat that, I like to give myself a really small box to work within.

So this was fun being able to make a variety of characters from very simple colors and very simple shapes.

You can watch the whole studio vlog on my YouTube channel. I’ve got six studio vlogs up already.

https://www.youtube.com/daspetey

I’ve also got some of these little magnet faces for sale up on my Etsy shop, if you’re interested in decorating your fridge. https://www.etsy.com/shop/daspetey

Eating Ghosts (ADAM Music Project, featuring No More Kings)

A while ago, my friend Adam asked Neil and me to be part of his new Pac-Man-inspired song, Eating Ghosts. Adam has a project called the A.D.A.M. Music Project, where he collaborates with a bunch of really cool artists on songs inspired by video games. So when he asked Neil and me to be part of a Pac-Man one, we were psyched!

But the really fun part is, immediately after release, the song got picked up on one of Spotify’s huge editorial playlists! https://open.spotify.com/playlist/37i9dQZF1DX23YPJntYMnh?si=c25c38cec0014fc3

It’s not very often that I sing on songs that I didn’t write, but honestly, this feels like the kind of song NMK would’ve thought of anyway. When Adam pitched me the idea, I was like, “oh man, why didn’t I think of that?”

After we recorded the song, Adam wanted a fun lyric video to debut it with. So he asked me to come up with one. I tried to reference the game vibe as much as I could for the video. You can check it out here: https://www.youtube.com/watch?v=TXmAHPGDbM8

Adam asked me to sing on another song too. This time it’s about Yoshi! I’ll post about that one soon.

Ink scape

A while ago, I made some fun ink wash pieces on watercolor paper. They were essentially abstract pieces where I dropped ink onto the paper and let it form random blobs. Then I went over it with white ink to create concentric circle patterns. I did a series of about six of these.

Later I rediscovered the pieces and thought it would be fun to give them some motion. So I brought them into Disco Diffusion and used them as the initial image for the AI to create an animation from.

The Matrix and AI

I’ve always loved The Matrix. The first movie has a special place in my heart. I saw it in theaters when it came out, knowing absolutely nothing about it. I was blown away.

So naturally, as AI tools started becoming more and more accessible, I decided to see what AI would do with The Matrix.

Specifically, I rendered out 1500 frames from the movie as stills, and trained an AI on that dataset. The imagery it produced wasn’t that interesting. So I tried a few more AI approaches.

I’ve had this problem before when training AI models on large datasets of dissimilar imagery. It sort of breaks. It doesn't really know what kind of imagery I’m looking for, so the results are messy.

A similar thing can happen when the dataset is too small. It picks one or two images and keeps repeating them. Neither of those is what I want.

AI generated morph

The next tool I tried was an AI model called Looking Glass. Looking Glass works by taking an image, or a group of images, as input and then generating variations of them from its pre-trained datasets. The stuff it creates can be pretty out there.

Aaaand that wasn’t much better. You can tell what it’s trying to do, but it keeps missing the mark.

That’s OK, I’ve got a few more AI tricks up my sleeve. Next is Disco Diffusion.

Now we’re getting weird! Disco Diffusion also has a mode for creating video, which moves through an image and continuously feeds it back through the algorithm. So I fed it a still frame and let it do its thing.

The Matrix has you

This idea has potential. I definitely want to experiment with more still frames, and see how weird things can get. But this one takes the longest. Usually I have to leave it overnight and check it in the morning. So I had one more AI tool to try before bed: Midjourney.

At first, Midjourney was giving me results that were “too good,” or rather, too close to the source material. It started to look like The Matrix, just re-cast.

So I decided to add some other terms to the prompt. “Retro mod illustration” got me this:

I kind of love this direction! This kind of output makes me feel like exploring my own illustration in this style. There’s a lot more I think I can do with this concept of sending still frames from my favorite movies through AI. And there’s a lot of different styles I can explore with the help of AI. This kind of stuff really excites me.

Maybe Fight Club next?

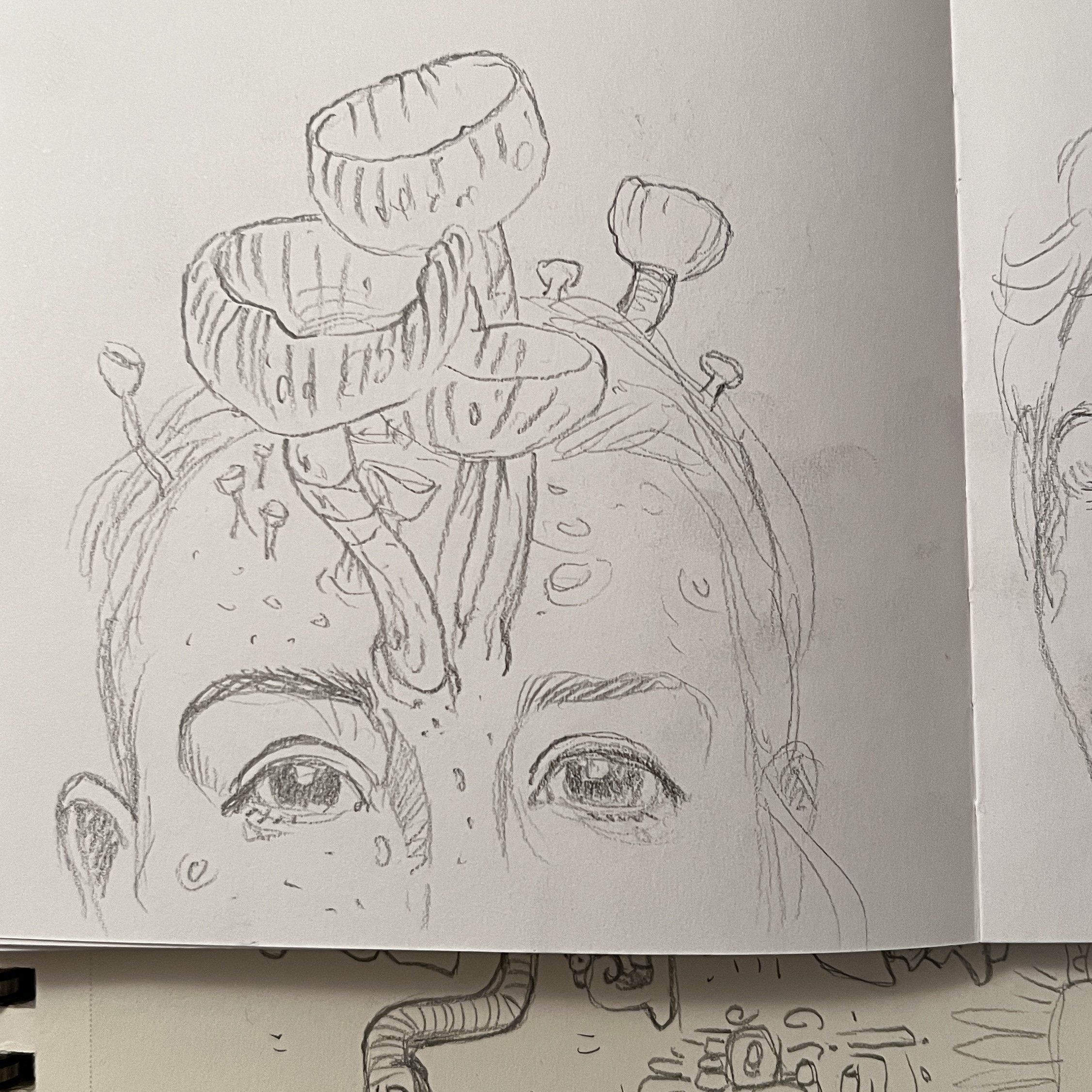

Mushroom People

I don’t know what started my recent fascination with painting portraits of people with mushrooms growing out of their heads, but it’s a thing now. I’ve filled a few sketchbook pages with them, and I’ve even done a few acrylic paintings.

Since my recent addiction to using AI to inspire new art pieces, I’ve also started training AI models on this stuff, to see what new imagery it can come up with.

In addition to the weird stuff above, I used a few other AI tools to give me some variations on my images. The results were varied, and disturbing. I’m really falling in love with the process of sending my art through AI models. It really feels like collaborating with an alien version of myself.

The next step for me is to take my favorites from the AI output, and sketch my own versions. Then I can work those up into paintings and send them back through the AI to see what it does with those.

I’m also trying to make sure I keep this project separate from my Cabbagehead project. I want to make sure each one has its own feel.

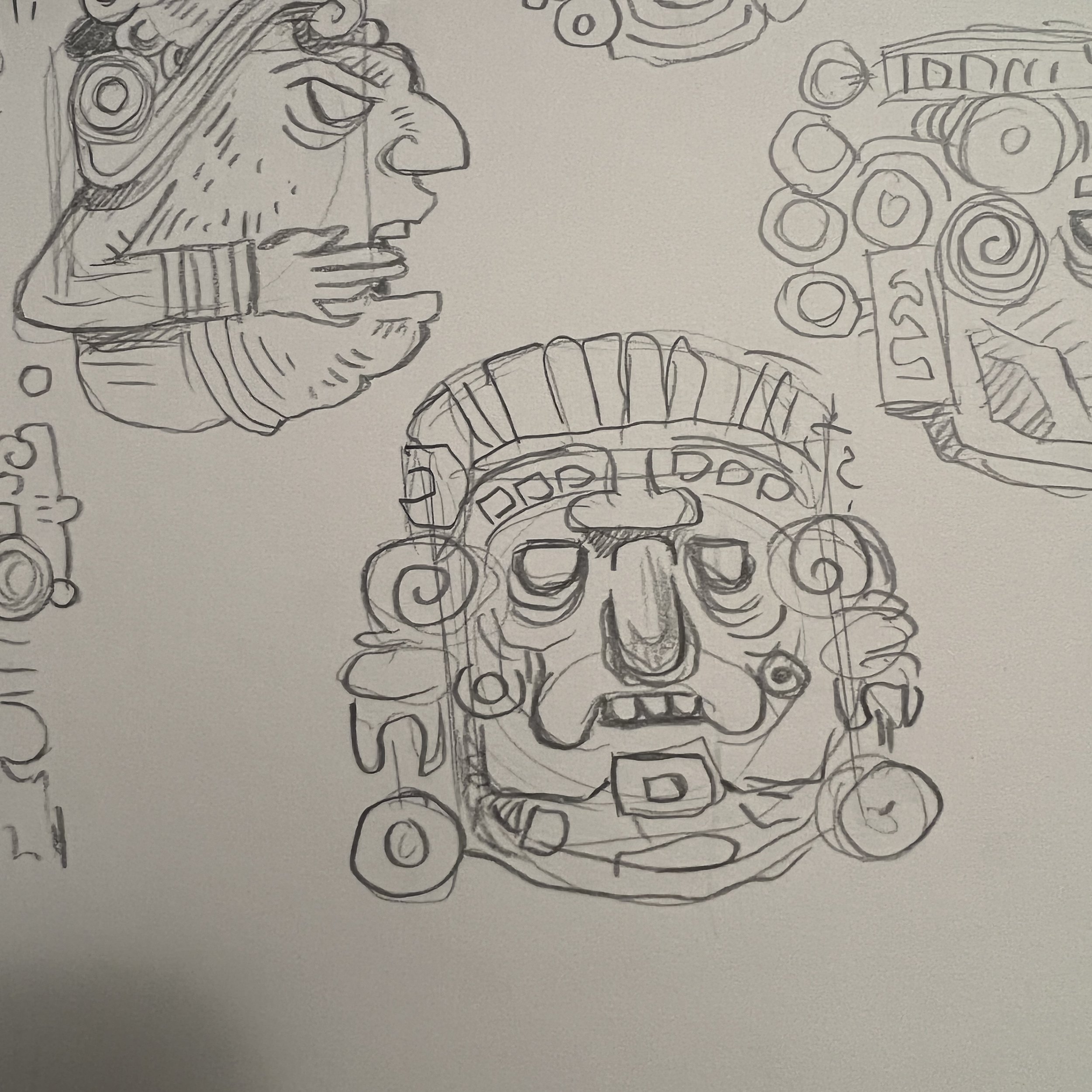

Making more Mayan-inspired art, with the help of AI

I've been working a lot on my Mayan-inspired art project called Lightbearer lately. And recently I started using AI to help me come up with ideas and variations on imagery.

This batch of four marker pieces was based on some of the output from my AI exploration, mixed with a little Mayan art reference.

I’ve been working on this series for a long time now, and the stuff I’m making ranges pretty widely in how much it references Mayan art. Additionally, I’ve recently been fascinated with using AI in my process. I use a variety of AI tools, in a variety of ways.

Here’s some of the output from one session working with AI.

These images were generated using Dalle-mini, which is a text-to-image generator. With these types of generators, it can take a while to dial in the look you want. For me, I’m just looking for it to give me new ideas. I don’t need the AI to give me a final art image.

So once I get some fun, usable stuff from the AI, I immediately start filling a few sketchbook pages with sketches. When I’m doing these, I’m not concerned with replicating the AI output. I’m trying to “collage” the best bits of the output into new single images.

The whole process is super fun for me. I love seeing what the AI makes. It feels a little like fishing. Each session, I’m just trying to catch a few good fish. And of course I love sketching, and honestly, I always feel like I should be sketching more than I do.

So that’s it! Please let me know if you have a method for using AI or other generative art methods in your own art making!